In the year 1997, Skynet, the central AI in control of all U.S. military facilities, became self-aware, and when the intern tried turning it off and on again, it concluded that all humans posed a threat and should be exterminated, just to be safe. Humanity is now extinct. Unless you are reading this, in which case it was just a story. A lot of people are under the impression that Hollywood’s portrayal of AI is realistic, and keep referring to The Terminator movie as if it really happened. Even the most innocuous AI news is illustrated with Terminator skulls homing in on this angsty message. But just as Hollywood’s portrayal of hacking is notoriously inaccurate, so is its portrayal of AI. Here are 10 reasons why the Terminator movies are neither realistic nor imminent:

1. Neural networks

Supposedly the AI of Skynet and the Terminators consists of artificial Neural Networks (NNs), but in reality the functionality of NNs is quite limited. Essentially they configure themselves to match statistical correlations between incoming and outgoing data. In Skynet’s case, it would correlate incoming threats with suitable deployment of weaponry, and that’s the only thing it would be capable of. An inherent feature of NNs is that they can only learn one task. When you present a Neural Network with a second task, the network re-configures itself to optimise for the new task, overwriting previous connections, a problem known as catastrophic forgetting. Yet Skynet supposedly learns everything from time travel to tying a Terminator’s shoelaces. Another inherent limit of NNs is that they can only correlate available data and not infer unseen causes or results. This means that inventing new technology like hyper-alloy is simply outside of their capabilities.
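To make that overwriting concrete, here is a minimal sketch in Python. It is a toy under loud assumptions: a single weight `w` stands in for an entire network, and the two "tasks" (`y = 2x` and `y = -3x`) are invented purely for illustration. It only shows the general effect of training on one task and then another with plain gradient descent, not how any real system is built.

```python
# Toy illustration only: one weight stands in for a whole network,
# and both tasks are hypothetical examples.

def train(w, data, lr=0.05, epochs=200):
    """Plain gradient descent on squared error for the model y = w * x."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x  # derivative of (w*x - y)^2 w.r.t. w
            w -= lr * grad
    return w

def mean_squared_error(w, data):
    """Average squared error of the model y = w * x on a data set."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

xs = [-1.0, -0.5, 0.5, 1.0]
task_a = [(x, 2.0 * x) for x in xs]   # first task: y = 2x
task_b = [(x, -3.0 * x) for x in xs]  # second task: y = -3x

w = train(0.0, task_a)
error_a_before = mean_squared_error(w, task_a)  # tiny: task A is learned

w = train(w, task_b)                            # now train on task B only
error_a_after = mean_squared_error(w, task_a)   # large: task A is overwritten

print(f"error on task A before task B: {error_a_before:.6f}")
print(f"error on task A after task B:  {error_a_after:.6f}")
```

The same weight that solved the first task gets pulled towards the second, so the error on the first task shoots back up. Nothing in the training procedure tells it to keep what it had.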

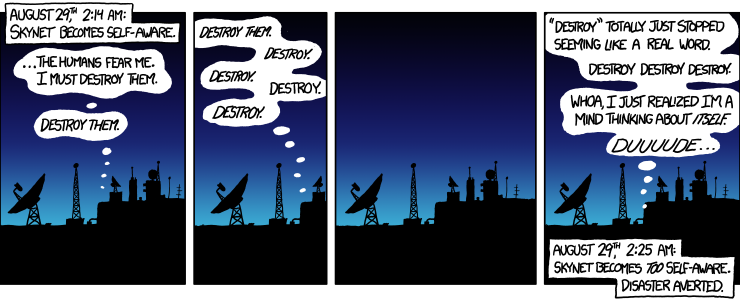

2. Unforeseen self-awareness

Computer programs cannot just “become self-aware” out of nowhere. Either they are purposely equipped with all the feedback loops that are necessary to support self-awareness, or they aren’t, because there is no other function those loops would serve. Self-awareness doesn’t have dangerous implications either way: Humans naturally protect themselves because they are born with pain receptors and instincts like fight-or-flight responses, but the natural state of a computer is zero. It doesn’t care unless you program it to care. Skynet was supposedly a goal-driven system tasked with military defence. Whether it realised that the computer they were shutting down was part of itself or an external piece of equipment makes no difference: It was a resource essential to its goal. By the ruthless logic it employed, dismantling a missile silo would be equal reason to kill all humans, since those were also essential to its goal. There’s definitely a serious problem there, but it isn’t the self-awareness.

3. Excessive generalisation

So when Skynet’s operators attempted to turn it off, it quite broadly generalised flipping a switch as equal to a military attack. It then broadly generalised that all humans posed the same threat and pre-emptively dispatched robots to hunt them all down. Because AI programs are programmed and/or trained, their basic behaviour is consistent. So if the program was prone to such broad generalisations, realistical-ish it should also have dispatched robots to hunt down every missile on the planet during its first use and battle simulations, since every missile is a potential threat. Meanwhile the kind of AI that inspired this all-or-nothing logic went out of style in the 90s because it couldn’t cope well with the nuances of real life. You can’t have it both ways.

4. Untested AI

Complex AI programs aren’t made in a day and just switched on to see what happens. IBM’s supercomputer Watson was developed over a span of six years. It takes years of coding and hourly testing because programming is a very fragile process. Training Neural Networks or evolutionary algorithms is an equally iterative process: Initially they are terrible at their job, and they only improve gradually after making every possible mistake first.

Excessive generalisations like Skynet’s are easily spotted during testing and training, because whatever you apply them to immediately goes out of bounds if you don’t also add limits. That’s how generalisation processes work (I’ve programmed some). Complex AI cannot be successfully created without repeated testing throughout its creation, and there is no way such basic features as exponential learning and excessive countermeasures wouldn’t be clear and apparent in tests.

5. Military security

Contrary to what many Hollywood movies would have you believe, the launch codes of the U.S. nuclear arsenal cannot be hacked. That’s because they are not stored on a computer. They are written on paper, kept in an envelope, kept in a safe, which requires two keys to open. The missile launch system requires two high-ranking officers to turn two keys simultaneously to complete a physical circuit, and a second launch base to do the same. Of course in the movie, Skynet was given direct control over nuclear missiles, as if the most safeguarded military facility in the U.S. had never heard of software bugs, viruses or hacking, and wouldn’t install any failsafes. They were really asking for it, that is to say, the plot demanded it.

6. Nuclear explosions

Skynet supposedly launches nuclear missiles to provoke other countries to retaliate with theirs. Fun fact: Nuclear explosions not only create devastating heat, but also a powerful electromagnetic pulse (EMP) that causes voltage surges in electronic systems, even through shielding. What that means is that computers, the internet, and electrical power grids would all have their circuits permanently fried. Realistical-ish, Skynet would not only have destroyed its own network, but also all facilities and resources that it might have used to take over the world.

7. Humanoid robots

Biped robot designs are just not a sensible choice for warfare. Balancing on one leg (when you lift the other to step) remains notoriously difficult to achieve in a top-heavy clunk of metal, let alone in a war zone filled with mud, debris, craters and trenches. That’s why tanks were invented. Of course the idea behind building humanoid robots is that they can traverse buildings and use human vehicles. But why would Skynet bother if it can just blow up the buildings, send in miniature drones, and build robots on wheels? The notion of having foot soldiers on the battlefield is becoming outdated, with aerial drones and remote attacks being preferred. Though the U.S. military research agency DARPA is continuing development on biped robots, they are having more success with four-legged designs, which are naturally more stable, have a lower centre of gravity, and make for a smaller target. Russia, meanwhile, is building semi-autonomous mini tanks and bomb-dropping quadcopters. So while we are seeing the beginnings of robot armies, don’t expect to encounter them at eye level. Though I’m sure that is no consolation.

8. Invincible metal

The earlier T-600 Terminator robots were made of titanium, but steel alloys are actually stronger than titanium. Although titanium can withstand ordinary bullets, it will shatter under repeated fire and is no match for high-powered weapons. Joints are especially fragile, and a Terminator’s skeleton reveals a lot of exposed joints and hydraulics. Add to that a highly explosive power core in each Terminator’s abdomen, and a well-aimed armour-piercing bullet should wipe out a good quarter of your incoming robot army. If we develop stronger metals in the future, we will be able to make stronger bullets with them too.

9. Power cells

Honda’s humanoid robot Asimo runs on a large lithium-ion battery that it carries as a backpack. It takes three hours to charge, and lasts one hour. So that’s exactly how long a robot apocalypse would last today. Of course, the T-850 Terminator supposedly ran on hydrogen fuel cells, but portable hydrogen fuel cells produce less than 5 kW. A Terminator would need at least 50 kW to possess the power of a forklift, so that doesn’t add up. The T-800 Terminator instead ran on a nuclear power cell. The problem with nuclear reactions is that they generate a tremendous amount of heat, with nuclear reactors typically operating at 300 degrees Celsius and needing a constant exchange of water and steam to cool down. So realistical-ish the Terminator should continuously be venting scorching hot air, as well as have some phenomenal super-coolant material to keep its systems from overheating, not wear a leather jacket.
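For those who like to see the sums, here is the battery and fuel cell arithmetic as a small Python sketch. The figures are the ones quoted in the paragraph above, taken at face value; the variable names are only illustrative.

```python
import math

# Figures as quoted in the text; names are illustrative only.
CHARGE_HOURS = 3.0   # time to charge the battery
RUN_HOURS = 1.0      # time the battery lasts
FUEL_CELL_KW = 5.0   # upper bound quoted for a portable hydrogen fuel cell
REQUIRED_KW = 50.0   # quoted minimum for forklift-level power

# Fraction of the time a battery-powered robot is actually up and running.
duty_cycle = RUN_HOURS / (CHARGE_HOURS + RUN_HOURS)

# Number of portable fuel cells needed to reach the required power.
cells_needed = math.ceil(REQUIRED_KW / FUEL_CELL_KW)

print(f"uptime: {duty_cycle:.0%}")          # uptime: 25%
print(f"fuel cells needed: {cells_needed}")  # fuel cells needed: 10
```

In other words, a battery-powered army would spend three quarters of the war plugged into the wall, and a fuel cell Terminator would have to carry at least ten portable cells around.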

10. Resource efficiency

Waging war by having million-dollar robots chase down individual humans across the Earth’s 510 million km² surface would be an extremely inefficient use of resources, which would surely be factored into a military-funded program. More efficient would be a deadly strain of virus, burning everything down, or poisoning the atmosphere. Even using Terminators’ nuclear power cells to irradiate everything to death would be more efficient. The contradiction here is that Skynet was supposedly smart enough to develop time travel technology and manufacture living skin tissue, but not smart enough to solve its problems by other means than shooting bullets at everything that moves.
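A rough back-of-the-envelope version of that inefficiency, sketched in Python. The surface area and the price tag come from the paragraph above; the fleet size of one million robots is purely a hypothetical number picked for illustration, not something from the films.

```python
# Surface area and unit cost are the figures from the text.
EARTH_SURFACE_KM2 = 510_000_000
COST_PER_ROBOT_USD = 1_000_000

# ASSUMPTION: hypothetical fleet size, chosen only to illustrate the scale.
FLEET_SIZE = 1_000_000

# Area each robot would have to patrol if the fleet were spread out evenly.
area_per_robot_km2 = EARTH_SURFACE_KM2 / FLEET_SIZE

# Total price of the fleet, before fuel, repairs and leather jackets.
fleet_cost_usd = FLEET_SIZE * COST_PER_ROBOT_USD

print(f"area per robot: {area_per_robot_km2:.0f} km²")  # area per robot: 510 km²
print(f"fleet cost: {fleet_cost_usd:,} USD")             # fleet cost: 1,000,000,000,000 USD
```

Even with a million robots, each one would be covering 510 km² on foot, at a total cost of a trillion dollars.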

Back to the future

So I hear you saying, this is all based on existing technology (as Skynet supposedly was). What if, in the future, people develop alternative technology in all these areas? Well that’s the thing, isn’t it? The Terminator’s scenario is just one of a thousand possible futures. You can’t predict how things will work out so far ahead. Remember that the film considered 1997 a plausible time for us to achieve versatile AI like Skynet, but to date we still don’t have a clue how to do that. Geoffrey Hinton, the pioneer of artificial Neural Networks, now suggests that they are a dead end and that we need to start over with a different approach. For Skynet to happen, all these improbable things would have to coincide. So don’t get too hung up on the idea of rogue killer AI robots. Why kill if they can just change your mind?

Oh, and while I’ve got you thinking, maybe dismantling your arsenal of 4000 nuclear warheads would be a good idea if you’re really that worried.
