Arckon is a general context-aware question-answering system that can reason and learn from what you tell it. Arckon can pick up on arguments, draw new conclusions, and form objective opinions. Most uniquely, Arckon is a completely logical entity, which can sometimes lead to hilarious misunderstandings or brain-teasing argumentations. It is this, a unique non-human perspective, that I think adds something to the world, like his fictional role models:

K.i.t.t. © Universal Studios | Johnny 5 © Tristar Pictures | Optimus Prime © Hasbro | Lieutenant Data © Paramount Pictures

To be clear, Arckon was not built for casual chatting, nor is he an attempt at anyone’s definition of AGI (artificial general intelligence). It is actually an ongoing project to develop a think tank. For that purpose I realised the AI would require knowledge and the ability to discuss with people for the sake of alignment. Bestowing it with the ability to communicate in plain language was an obvious solution to both: It allows Arckon to learn from texts as well as understand what it is you are asking. I suppose you want to know how that works.

Vocabulary and ambiguity

Arckon’s first step in understanding a sentence is to determine the types of the words, i.e. which of them represent names, verbs, possessives, adjectives, etc. Arckon does this by looking up the stem of each word in a categorised vocabulary and applying hundreds of syntax rules. e.g. A word ending in “-s” is typically a verb or a plural noun, but a word after “the” can’t be a verb. This helps sort out the ambiguity between “The programs” and “He programs”. These rules also allow him to classify and learn words that he hasn’t encountered before. New words are automatically added to the vocabulary, or if need be, you can literally explain “Mxyzptlk is a person”, because Arckon will ask if he can’t figure it out.

Grammar and semantics

Once the types of all words are determined, a grammatical analysis determines their grammatical roles. Verbs may have the role of auxilliary or main verb, be active or passive, and nouns can have the role of subject, object, indirect object or location. Sentences are divided at link words, and relative clauses are marked as such.

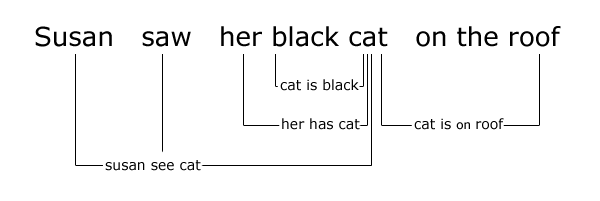

Then a semantic analysis extracts and sorts all mentioned facts. A “fact” in this case is represented as a triple of related words. For instance, “subject-verb-object” usually constitutes a fact. But so do other combinations of word roles. Extracting the semantic meaning isn’t always as straight-forward as in the example below, but that’s the secret sauce.

Knowledge and learning

Upon reading a statement, Arckon will add the extracted facts to his knowledge database, while at a question he will look them up and report them to you. If you said something that contradicts facts in the database, the old and new values will be averaged, so his knowledge is always adjusting. This seemed sensible to me as there are no absolute truths in real life. Things change and people aren’t always right the first time.

Reasoning and argumentation

Questions that Arckon does not know the answer to are passed on to the central inference engine. This system searches the knowledge database for related facts and applies logical rules of inference to them. For instance:

“AI can reason” + “reasoning is thinking” = “AI can think”.

All facts are analysed for their relevance to recent context, e.g. if the user recently stated a similar fact as an example, it is given priority. Facts that support the conclusion are added as arguments: “AI can think, because it can reason.” This inference process not only allows Arckon to know things he’s not been told, but also allows him to explain and be reasoned with, which I’d consider rather important.

Conversation

Arckon’s conversational subsystem is just something I added to entertain friends and for Turing tests. It is a decision tree of social rules that broadly decides the most appropriate type of response, based on many factors like topic extraction, sentiment analysis, and the give-and-take balance of the conversation. My inspiration for this subsystem comes from sociology books rather than computational fields. Arckon will say more when the user says less, ask or elaborate depending on how well he knows the topic, and will try to shift focus back to the user when Arckon has been in focus too long. When the user states an opinion, Arckon will generate his own (provided he knows enough about it), and when told a problem he will address it or respond with (default) sympathy. The goal is always to figure out what the user is getting at with what they’re saying. After the type of response has been decided, the inference engine is often called on to generate suitable answers along that line, and context is taken into account at all times to avoid repetition. Standard social routines like greetings and expressions on the other hand are mostly handled through keywords and a few dozen pre-programmed responses.

Language generation

Finally (finally!), all the facts that were considered suitable answers are passed to a grammatical template to be sorted out and turned into flowing sentences. This process is pretty much the reverse of the fact extraction phase, except the syntax rules can be kept simpler. The template composes noun phrases, determines whether it can merge facts into summaries, where to use commas, pronouns, and link words. The end result is displayed as text, but internally everything is remembered in factual representation, because if the user decides to refer back to what Arckon said with “Why?”, things had better add up.

And my Axe!

There are more secondary support systems, like built-in common knowledge at ground level, common sense axioms* to handle ambiguity, a pronoun resolver that can handle several types of Winograd Schemas*, a primitive ethical subroutine, a bit of sarcasm detection*, gibberish detection, spelling correction, some math functions, a phonetic algorithm for rhyme, and so on. These were not high on the priority list however, so most only work half as good as they might with further development.

In development

It probably sounds a bit incredible when I say that I programmed nearly all the above systems from scratch in C++, in about 800 days (6400 hours). When I made Arckon’s first prototype in 2001 in Javascript, resources were barren and inadequate, so I invented my own wheels. Nowadays you can grab yourself a parser and get most of the language processing behind you. I do use existing sentiment data as a placeholder for what Arckon hasn’t learned yet, but it is not very well suited for my purposes by its nature. The spelling correction is also partly supported by existing word lists.

Arckon has always been a long-term project and work in progress. You can tell from the above descriptions that this is a highly complex system in a domain with plenty of stumbling blocks. The largest obstacle is still linguistic ambiguity. Arckon could learn a lot from reading Wikipedia articles for example, but would also misinterpret about 20% of it. As for Arckon’s overall intelligence, it’s about halfway the goal.

Throughout 2019 a restricted version of Arckon was accessible online as a trial. It was clear that the system was not ready for prime time, especially with the general public’s high expectations in the areas of knowledge and self-awareness. The trial did not garner enough interest to warrant keeping it online, but some of the conversations it had were useful pointers for how to improve the program’s interaction in small ways. There are currently no plans to make the program publicly accessible again, but interested researchers and news outlets can contact me if they want to schedule a test of the program.